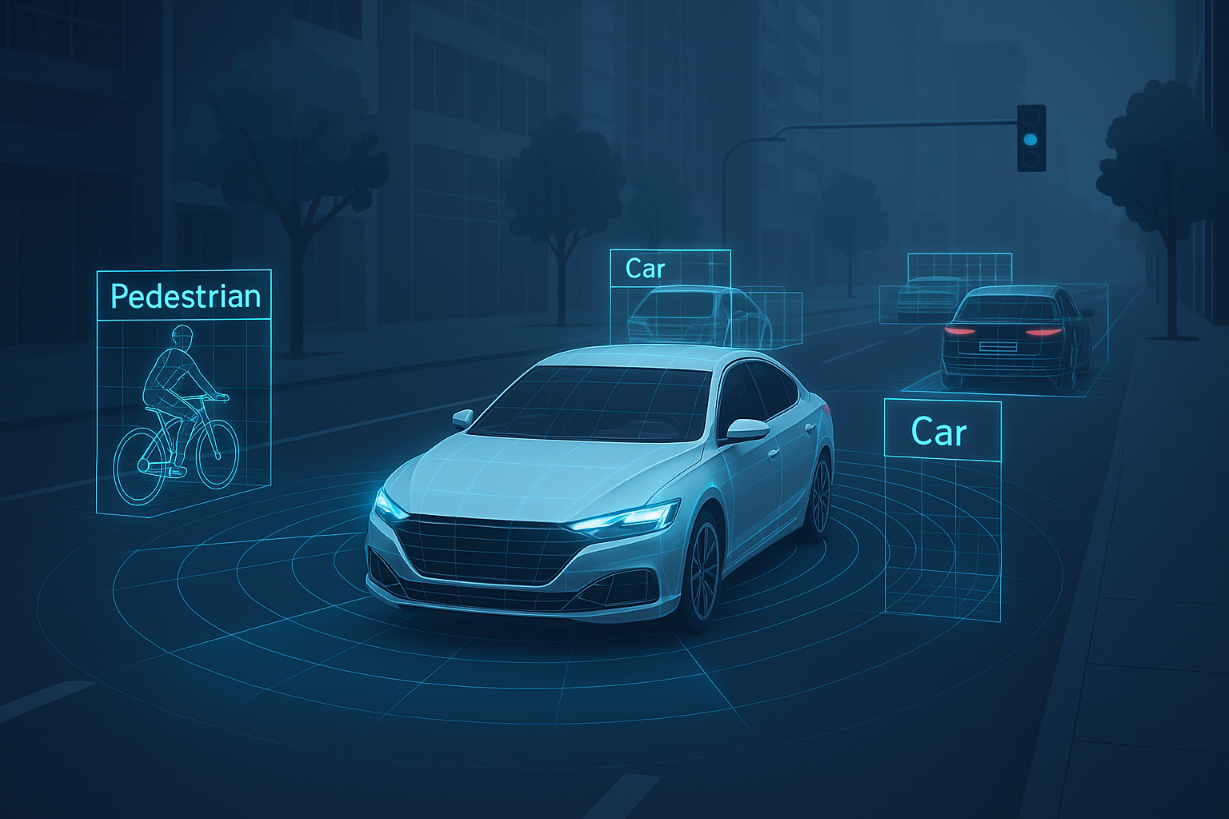

Perception: The Foundation of Autonomous Intelligence

When talking about autonomous systems, whether self-driving cars, warehouse robots, or delivery drones, the spotlight often lands on the end goal: full autonomy. But before any of that can happen, the machine needs to do something far more fundamental: understand its environment. That capability is called perception, and it’s the unsung hero that powers everything from safe navigation to intelligent decision-making.

What Is Perception in Robotics?

In technical terms, perception is the combination of sensor data fusion, object detection, scene understanding, and localization. It allows a machine to build a real-time, high-fidelity model of its environment.

Most systems rely on a combination of:

- Cameras – for rich visual data and semantic context.

- LiDAR – for precise 3D depth information and obstacle detection.

- Radar – for robust sensing in poor weather or lighting.

- Ultrasonic sensors – for close-range proximity awareness.

- IMU and GPS – to track motion and global position (when available).
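To make sensor fusion concrete, here is a minimal sketch that combines several sensors’ range readings of one object using inverse-variance weighting. This is the same update a one-dimensional Kalman filter performs when estimating a static quantity. It assumes the sensors’ errors are independent and roughly Gaussian. The `RangeEstimate` type, the `fuse` function, and the noise values are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    distance_m: float   # measured distance to the object, in metres
    variance_m2: float  # measurement noise variance (sigma squared), in m^2


def fuse(estimates: list[RangeEstimate]) -> RangeEstimate:
    """Inverse-variance weighted fusion of independent range estimates.

    Each reading is weighted by 1 / variance, so precise sensors dominate.
    The fused variance is 1 / sum(weights), which is never larger than the
    smallest input variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    distance = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return RangeEstimate(distance, 1.0 / total)


# Hypothetical readings of the same object: LiDAR precise, radar noisier,
# camera-based depth noisiest. The noise figures are illustrative only.
lidar = RangeEstimate(20.0, 0.01)
radar = RangeEstimate(20.5, 0.25)
camera = RangeEstimate(21.0, 1.0)
fused = fuse([lidar, radar, camera])
print(f"{fused.distance_m:.3f} m, variance {fused.variance_m2:.4f}")
```

With these numbers the fused distance comes out at about 20.03 m. It is pulled almost entirely toward the LiDAR reading, and its variance (about 0.0095 m^2) is slightly below LiDAR’s own. Real stacks typically fuse full object states over time with Kalman-style filters rather than single distances.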

Perception algorithms process this multi-modal sensor data using techniques such as SLAM (simultaneous localization and mapping), neural networks, and point cloud segmentation to extract useful information: where things are, what they are, and how they’re moving.
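Point cloud segmentation can be illustrated with one of the simplest classical pipelines. First, discard returns near an assumed flat ground plane. Then group the remaining points into obstacles by Euclidean clustering. This sketch rests on strong assumptions: flat ground at a known height and a brute-force neighbour search. `remove_ground`, `euclidean_clusters`, and their thresholds are hypothetical names and values, not a specific library’s API.

```python
import math
from collections import deque

Point = tuple[float, float, float]  # (x, y, z) in metres, z pointing up


def remove_ground(points: list[Point], ground_z: float = 0.0, tolerance: float = 0.15) -> list[Point]:
    """Drop returns within `tolerance` of an assumed flat ground plane at height ground_z."""
    return [p for p in points if p[2] > ground_z + tolerance]


def euclidean_clusters(points: list[Point], radius: float = 0.5, min_size: int = 3) -> list[list[Point]]:
    """Group points connected by chains of neighbours no farther apart than `radius`.

    Uses a brute-force O(n^2) neighbour search; practical systems use a
    k-d tree or voxel grid. Clusters smaller than min_size are treated as noise.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            # Collect every not-yet-assigned point within `radius` of point i.
            neighbours = [j for j in unvisited if math.dist(points[i], points[j]) <= radius]
            for j in neighbours:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        if len(members) >= min_size:
            clusters.append([points[k] for k in members])
    return clusters


# Hypothetical LiDAR returns: two ground hits, two small obstacles, one stray point.
scan = [
    (1.0, 0.0, 0.0), (2.0, 0.5, 0.05),
    (5.0, 0.0, 0.5), (5.2, 0.1, 0.6), (5.1, -0.1, 0.9),
    (9.0, 3.0, 0.4), (9.3, 3.1, 0.5), (9.1, 2.9, 0.8),
    (15.0, -4.0, 1.2),
]
obstacles = euclidean_clusters(remove_ground(scan))
print(len(obstacles))  # 2
```

The stray point forms a cluster of one and is discarded as noise. This is also why learned segmentation networks are attractive: they can separate touching objects and assign semantic labels, which pure distance-based grouping cannot.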

Why Perception Matters

Autonomous mobility hinges on the machine’s ability to:

- Detect and classify objects (vehicles, pedestrians, signs, animals, etc.)

- Estimate distances and predict trajectories

- Identify drivable surfaces, lanes, and environmental features

- React to dynamic and unpredictable elements in real time
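Estimating distances and predicting trajectories can start from very simple motion models. The sketch below assumes a constant-velocity model in a vehicle-centred frame (x forward, y to the left). It rolls a tracked object forward in time and computes a time-to-collision from a gap and a closing speed. `Track`, `predict`, and `time_to_collision` are hypothetical names. Modern learned predictors are far richer, but a constant-velocity rollout is a common starting point.

```python
from dataclasses import dataclass


@dataclass
class Track:
    x: float   # position in metres, vehicle frame: x forward
    y: float   # y to the left
    vx: float  # velocity in m/s
    vy: float


def predict(track: Track, horizon_s: float = 3.0, dt: float = 0.5) -> list[tuple[float, float]]:
    """Constant-velocity rollout: predicted (x, y) at t = dt, 2*dt, ..., horizon_s."""
    steps = round(horizon_s / dt)
    return [(track.x + track.vx * k * dt, track.y + track.vy * k * dt) for k in range(1, steps + 1)]


def time_to_collision(gap_m: float, closing_speed_mps: float) -> float:
    """Seconds until the gap reaches zero if it keeps closing at a constant rate."""
    if closing_speed_mps <= 0:
        return float("inf")  # gap is constant or opening: no collision under this model
    return gap_m / closing_speed_mps


# Hypothetical pedestrian 10 m ahead and 4 m to the left, walking right at 1.5 m/s.
pedestrian = Track(x=10.0, y=4.0, vx=0.0, vy=-1.5)
print(predict(pedestrian, horizon_s=3.0, dt=1.0))  # [(10.0, 2.5), (10.0, 1.0), (10.0, -0.5)]
print(time_to_collision(gap_m=20.0, closing_speed_mps=5.0))  # 4.0
```

The predicted path crosses the vehicle’s centreline (y = 0) between the second and third second. That is the kind of conflict a planner would then have to reason about.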

Any failure in perception compromises safety, decision-making, and user trust. That’s why perception is often the most complex and computationally demanding part of an autonomous vehicle (AV) or robotic stack.

The Role of AI in Perception

Recent advances in deep learning, especially convolutional neural networks (CNNs) and transformer-based models, have significantly improved perception accuracy. These models enable semantic segmentation, instance segmentation, and motion prediction, all of which are critical for understanding complex urban environments or cluttered indoor spaces.

But perception isn’t just about labelling pixels. It’s about building context-aware understanding: for example, knowing the difference between a parked car and one that’s about to merge.
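The parked-versus-merging distinction can be caricatured with a hand-written rule. Treat a car as stationary if its speed is near zero, and as merging if its lateral distance to the ego lane is shrinking faster than some threshold. This is only an illustration. It assumes a tracker supplies a short history of lateral offsets, and its thresholds are invented. The names `lateral_velocity` and `classify_neighbour` are hypothetical. Real systems learn such cues from data and use far more context, such as turn signals and road layout.

```python
def lateral_velocity(times_s: list[float], offsets_m: list[float]) -> float:
    """Least-squares slope of lateral offset versus time, in m/s."""
    n = len(times_s)
    if n < 2:
        raise ValueError("need at least two samples")
    t_mean = sum(times_s) / n
    o_mean = sum(offsets_m) / n
    num = sum((t - t_mean) * (o - o_mean) for t, o in zip(times_s, offsets_m))
    den = sum((t - t_mean) ** 2 for t in times_s)
    if den == 0:
        raise ValueError("samples must span a time interval")
    return num / den


def classify_neighbour(times_s: list[float], offsets_m: list[float], speed_mps: float,
                       stop_speed: float = 0.3, merge_rate: float = 0.4) -> str:
    """Label a neighbouring car from its recent track (hypothetical thresholds).

    offsets_m: distance from the car to the ego lane boundary at each time;
    a shrinking offset means the car is drifting towards the ego lane.
    """
    if speed_mps < stop_speed:
        return "stationary"  # could be parked, or just stopped in traffic
    if -lateral_velocity(times_s, offsets_m) > merge_rate:
        return "merging"
    return "keeping lane"


times = [0.0, 0.5, 1.0, 1.5]
print(classify_neighbour(times, [1.5, 1.5, 1.5, 1.5], speed_mps=0.0))  # stationary
print(classify_neighbour(times, [1.5, 1.2, 0.9, 0.6], speed_mps=8.0))  # merging
print(classify_neighbour(times, [1.5, 1.5, 1.5, 1.5], speed_mps=8.0))  # keeping lane
```

Note that even this toy rule cannot tell a parked car from one waiting at a red light. Resolving that ambiguity takes exactly the kind of scene context the paragraph above describes.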

In Summary

Perception is the “eyes and brainstem” of any autonomous system. Without it, autonomy is blind. With it, machines can interpret the real world with remarkable precision and act safely within it.

As autonomy continues to evolve, perception remains both the bottleneck and the breakthrough. It’s the frontier where computer vision, machine learning, and robotics converge to make intelligent mobility a reality.

If you’re looking to hire perception experts to spearhead the development of autonomous mobility, contact david@akkar.com.